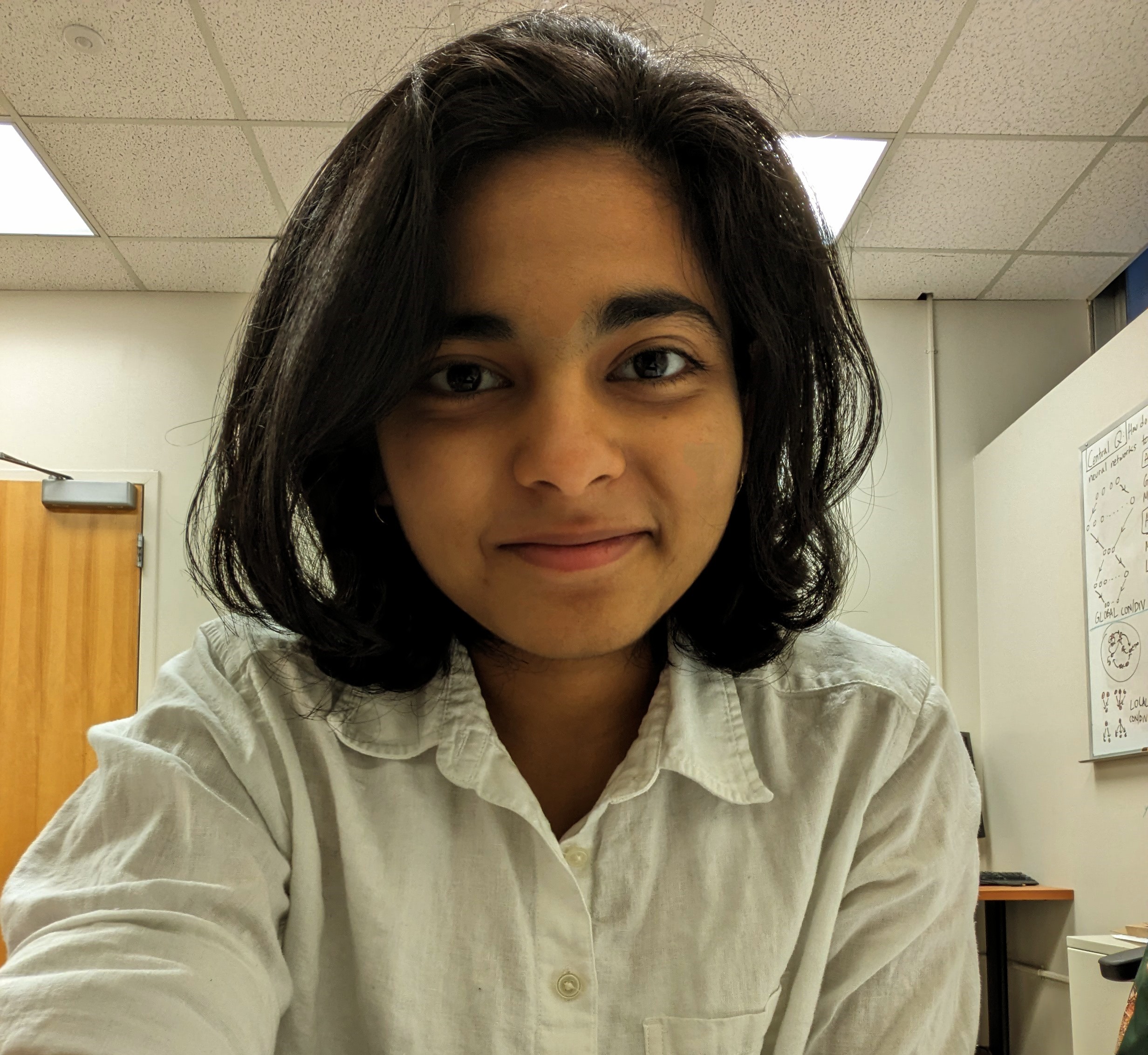

Aishwarya H. Balwani

Postdoctoral Research Associate, Department of Developmental Neurobiology @St. Jude Children's Research Hospital

Recent-ish News

- December 2025: Our paper on constructing biologically-constrained RNNs that respect Dale’s law while incorporating sparse connectivity motifs is now published in Science Advances! This work was also presented as a poster at COSYNE 2025.

- November 2025: Awesome new work (and my first as a senior author!) studying the geometric properties of emergent misalignment in parameter space is up on arXiv! The work has been accepted to three workshops at NeurIPS 2025, including an Oral at UniReps (along with an Honorable Mention 🏆) and a Spotlight at MechInterp. Code and more are available at the project page! This work was carried out with one of my summer teams at Algoverse.

- November 2025: New preprint on evaluating LLMs as deep research agents 🤖🔍📜 is now up on arXiv! This work was carried out in collaboration with Scale AI. Learn more about the project on the ResearchRubrics website!

- September 2025: I’ve started my new role as a postdoctoral research associate at St. Jude Children’s Research Hospital in their Department of Developmental Neurobiology! I’m working with Dr. Stanislav Zakharenko to better understand the neural circuit mechanisms of schizophrenia and of auditory processing more broadly, leveraging techniques in ML/DL with a focus on mechanistic interpretability and predictive coding.

- August 2025: Our paper studying the architectural biases of the canonical cortical microcircuit has been published in Neural Computation! This work was also highlighted as a talk at COSYNE ’23 in Montréal!

- April 2025: I participated in the 2025 Frontiers in Science Conference and Symposium at Georgia Tech (focused on Intelligence + Neuroscience + AI), and won a best poster award!

- February 2025: I am being supported by Open Philanthropy’s Career Development & Transition Funding through Spring ’25 as I upskill in AI Safety and Alignment. Thanks, Open Phil!

- February 2025: I’ve successfully defended my PhD! My thesis is titled Through the RNN Looking Glass: Structure-Function Relationships in Cortical Circuits for Predictive Coding, and the dissertation document, defense talk, as well as slides are all accessible online!

About Me

I recently earned my PhD in Electrical & Computer Engineering at Georgia Tech, where my research broadly revolved around characterizing learning and intelligence in artificial and biological systems. To these ends, I spent my time studying both machine learning and theoretical + computational neuroscience, with a healthy smattering of topics from applied mathematics. I was primarily advised by Dr. Hannah Choi and co-advised by Dr. Chris Rozell.

My thesis used RNNs as models of cortical circuits to understand structure-function relationships in the canonical cortical microcircuit through the lens of predictive coding, using a combination of tools from high-dimensional geometry, neuroscience, and signal processing. It also developed methods to construct more biologically plausible deep learning models that are easy to scale and train, with theoretical guarantees on learning efficiency and performance.

Ongoing Research

My research thus far has often leveraged structure (geometrical and topological) in the representations and architectures of artificial & biological neural networks to render them more interpretable and thereby discover their governing principles. Some ideas that I actively think about in these contexts are:

- Sparse, low-rank, and low-dimensional approximations of data.

- The effects of regularization & architecture on representations learnt by neural networks.

- The disentangling of data manifolds, the quantification of the representations that live on them, their evolution across space-time, and the changes that different perturbations bring about in them.

- Generalizability and potential for transfer of representations across different tasks and domains.

My long-term goals of understanding learning + intelligence combined with my facility for math & engineering have led to the following (somewhat) more tangible goals that I try to actively contribute to with my research:

- Development of mathematical models and computational methods that effectively characterize as well as elucidate changes in structure, organization, and information processing in deep neural networks and the brain across different stages of development.

- Development of “better” ML/AI systems that require less supervision and/or data, learn progressively, and are more amenable to changes in task structure (along with supporting foundational theory or guarantees whenever possible!)

- Tackling AI Alignment from first principles, i.e., trying to fundamentally ensure that deep learning models, as and when deployed, do what we want/intend them to do (a relatively recent foray!)

Interests & Hobbies

Machine Learning: Unsupervised/self-supervised learning, dimensionality reduction & manifold learning, metric/similarity learning, and learning with structured sparsity.

Mathematics: Matrix & tensor decompositions, column subset selection, low-rank approximation, metric embeddings, convex geometry, optimization, group & representation theory, differential geometry & topology, and information geometry.

Neuroscience: Neural (i.e., population and sparse) coding, predictive coding, synaptic plasticity & learning rules, models of brain structure & organization, and connectomics.

Outside of academic and scientific pursuits, my hobbies include reading 📖, listening to + studying classical music 🎼, watching + playing racquet sports 🎾, trying (and often failing) to keep up with cool movies + TV shows 🎥, and solving every Rubik’s cube variant 🎲 I can get my hands on.

I am incredibly fond of cats 🐈, enjoy history of almost any kind 📜, still identify as an ardent Federer fan 💜, and remain a Bombay kid 🌏🏠👶 at heart for life 🌈.